MediaLoom: An Interactive Authoring Tool for Hypervideo

John Tolva

"We may say most aptly that the Analytical Engine weaves algebraical patterns just as the Jacquard loom weaves leaves and flowers."

-- Ada Augusta, Countess of Lovelace, 1843

Abstract

MediaLoom is a cross-platform visual authoring tool for creating hypervideo. The hypervideo media form is a sub-genre of hypermedia composed primarily of digital video and based on the link-node structure of hypertext. Before MediaLoom, hypervideos were created by hand-coding the behavior of the nodes and links into a script file which was read by the Hypervideo Engine runtime code developed by Nitin Sawhney. The scripts required by the runtime were prone to hard-to-debug syntax errors and offered no visual overview of the hypervideo project. MediaLoom generates script files automatically using a graphical environment modeled on the Storyspace hypertext authoring tool. Though MediaLoom does not extend the functionality of the Hypervideo Engine runtime, it greatly simplifies authoring for that environment.

Also described herein is the creation of the Short Cuts hypervideo, the first demonstration of a MediaLoom-authored hypervideo, and the progress to date on Letterbox, the long-term hypervideo project that catalyzed the development of MediaLoom. The complexity of Letterbox required that its developers think beyond the current implementation of MediaLoom. Thus, this paper includes a discussion of new interaction modalities and features required for future development of a much-improved runtime and authoring tool. Lastly, since hypervideo has primarily been used for the construction of interactive narratives (and since this is the use for which MediaLoom was built), the conclusion of this essay offers a short analysis of narrative flow and the viewing experience in a hypervideo environment.

Keywords

hypervideo, hypertext, hypermedia, interactive narrative, storytelling, storyspace, high-level authoring tool, storyboarding notation

I. Introduction

Early in 1997 Derek Bambach and I developed a hypervideo using the Hypervideo Engine developed by Nick Sawhney. This project, called the Orbital Hypervideo and based on video from an interview with a British techno-band, was only the second major hypervideo ever created with Sawhney’s system. We were interested in hypervideating the interview to allow the viewer to navigate thematic sections which were not connected chronologically. Though the restructured interview was successful from the user’s perspective, the actual creation of the hypervideo required an inordinate amount of effort. MediaLoom, in part, is the result of thinking about a way to ameliorate some of the difficulty of authoring the Orbital hypervideo.

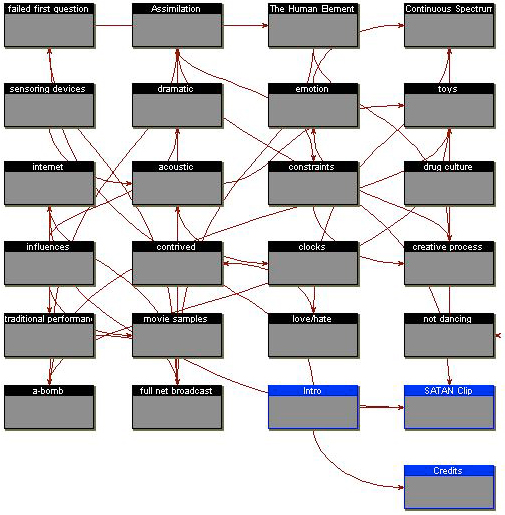

The authoring process had many disparate stages. After digitizing the analog videotapes and splitting the clips into usable segments, we taught ourselves the hypervideo scripting protocol as formulated by Sawhney. We studied the scripts for the only available hypervideo – HyperCafe, by Sawhney, David Balcom, and Ian Smith – tinkering with them to see how changes were effected in the actual hypervideo. Next, we created a Storyspace document [see figure 1] as a kind of hypertextual storyboard. Each writing space corresponded to an individual Quicktime video clip. As the HyperCafe team had demonstrated during their development process, Storyspace was well suited to visualizing the structure of hypervideo. Links could easily be made from space to space, in effect substituting video clips for lexias.

Figure 1: Orbital Hypervideo Storyspace document

However, this node-to-node level of granularity was not fine enough; we needed to depict hypervideo links arriving and departing video clips at specific times during their playback. Though Storyspace allows the insertion of Quicktime video clips into individual writing spaces, it does not permit linking to a particular moment in the clip itself; the video clip in Storyspace is not a part of the hypertextual network. We thus had to use a stopwatch to log the departure and arrival times for the clip-to-clip links. In each writing space we inserted a still image of the video clip (to save disk space) as well as link departure and arrival times textually linked to the nodes for other clips.

This process of playing each video clip in order to manually record possible link times (both inbound and outbound) and then transcribing them to Storyspace was lengthy and tedious. Storyspace did provide a workable framework for organizing the relationships between clips in our hypervideo. What we longed for, though, was a Storyspace for Hypervideo, a way of linking video clips directly inside the nodal arrangement of Storyspace. This was especially true when we moved to the final stage of development. Transferring our custom notation and our mm:ss time code to the cryptic syntax of the Hypervideo Engine was an error-prone process. The code for a single node could easily look like this:

```
on MainVideoScript
  showText "Think inside the box.", "bottom", "right"
  playVideo "nilos6.mov", 0, 0, 5, 1, 1
  removeText "bottom"
  showText "I see I have met Agent 99.", "top", "center"
  tempoLinks [3:"Marti_meets_Luke", 5:"Luke_on_the_rails", 7:"circuit_board", 10:"MainVideoScript"]
  playVideo "circuit1.mov", 5.5, 7.3, 3, 1, 0
  removeText "top"
  showText "Panning", "top", "right"
  playVideo "marti2.mov", 0, 0, random(9), 1, 1
  removeText "top"
  endVideo
end MainVideoScript
```
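Part of the transcription step described before the listing, converting stopwatch readings into numeric values, is easy to mechanize. What follows is a minimal Python sketch under two assumptions: that the logged times are written as `mm:ss` strings, and that the Engine's numeric time arguments are seconds (the unit is not stated here). The helper `mmss_to_seconds` is hypothetical and is not part of the Hypervideo Engine or MediaLoom.

```python
def mmss_to_seconds(timecode: str) -> float:
    """Convert an 'mm:ss' (or 'mm:ss.s') stopwatch reading to seconds.

    Assumption: the Engine expects seconds; the paper does not state the unit.
    """
    minutes_text, separator, seconds_text = timecode.strip().partition(":")
    if not separator:
        raise ValueError(f"expected mm:ss, got {timecode!r}")
    minutes = int(minutes_text)
    seconds = float(seconds_text)
    # Reject readings such as '1:75' or '-1:30' instead of silently accepting them.
    if minutes < 0 or not 0 <= seconds < 60:
        raise ValueError(f"time code out of range: {timecode!r}")
    return minutes * 60 + seconds
```

Rejecting malformed readings at this stage is a deliberate choice: a mistyped time is cheaper to catch during conversion than after it has been buried in a script.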

In his Master’s project paper, Sawhney outlines a few of the possibilities for future work with his Engine. As an introduction to the idea of an interactive authoring tool, Sawhney writes, "Currently, scripting is a somewhat tedious activity, and scripts do not easily convey a holistic representation of the overall hypervideo expression. Link opportunities and temporal/spatial attributes of the scenes are defined in scripts, but without an appropriate visualization, an author may find it difficult to conceptualize or modify the dynamic structure and navigational paths in the hypervideo." I have seized upon this notion of a high-level interactive authoring tool and have created MediaLoom to function as a Storyspace-like "front-end" for the Hypervideo Engine code.

II. Foundational and Related Work

The Hypervideo Engine

Since MediaLoom is designed to generate scripts to be used exclusively with the Hypervideo Engine runtime it obviously owes a great deal to the structure and functionality of Sawhney’s artifact. The Hypervideo Engine was built in 1996 as a standalone environment for creating and running a new media form (co-conceived by Nick Sawhney and David Balcom) called hypervideo. Based explicitly on a hypertextual link-node arrangement, hypervideo is a medium for computer-based narrative created by an interactive montage of text and video clips presented at a fraction of full screen size (as contrasted with Videodisc installations or kiosk applications). Links are mainly temporal (that is, indexed to a discrete moment in time), though textual links are available as well. Unique to this kind of interactive video is the enforced temporal flow. Sawhney writes, "video sequences play continuously, and at no point can they be stopped by actions of the user." Though hypervideo is designed to be viewed at a computer its interface de-emphasizes the traditional elements of desktop-metaphor interactivity: menus, buttons, icons, etc. Rather, the interface is formed by the video clips themselves. [See figure 2.] Simple mouse-based navigation amongst continually moving video clips is a viewer’s only modality of interaction.

Figure 2: screenshot from the Orbital Hypervideo

The Hypervideo Engine was built in Macromedia Director version 4.0. Sawhney refers to it as "a generalized content-independent implementation of the hypervideo framework." The Engine is a standalone application that runs on the Macintosh PowerPC platform. It is strictly a runtime application in the sense that it plays no part in the generation of the textual script files or media files that it acts upon when running the hypervideo. Sawhney’s code lacks many desirable features for a robust hypervideo system (discussed in section V, below), but it is a very useful starting point.

The Engine allows three different link types. Traditional hypertext is given a temporal dimension in the hypervideo framework. Words appear on screen for a limited duration of time, in effect making the text as ephemeral and fleeting as the video itself. The temporal link exists within the video frame as well. A single video clip may contain several outbound links, each spatially "hot" across the entire surface of the clip, but limited in duration to a specific number of seconds. These temporal links are also manifest as "opportunities" outside of the immediate visual field. The hypervideo framework allows "preview" clips to appear adjacent to the currently playing clip. These previews give a sense of where a link in the main clip might lead if followed. At present the Engine allows no type of intra-frame spatial linking.
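The temporal link described above, hot across the whole clip but only for a limited number of seconds, can be modeled very compactly. The following Python sketch is illustrative only: the class `TemporalLink`, its field names, and the function `active_links` are hypothetical and do not reflect the Engine's internal Lingo data structures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TemporalLink:
    target: str      # name of the destination scene (hypothetical field)
    start: float     # seconds into the clip at which the link becomes "hot"
    duration: float  # number of seconds the link remains available

    def is_active(self, playhead: float) -> bool:
        # The link is available from `start` up to, but not including, its end.
        return self.start <= playhead < self.start + self.duration


def active_links(links, playhead):
    """Return the targets of every link that is hot at the given playhead time."""
    return [link.target for link in links if link.is_active(playhead)]
```

Because a clip may carry several outbound links, more than one target can be returned for the same moment; how the Engine resolves such overlaps is not specified here.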

The Engine itself works in conjunction with a small initializer application (also written in Director) which generates a preference file that is read at runtime. The initializer allows the author or user to set environment variables like font attributes, bookmarking, video skipping, and so on. In addition to reading the script and preference files, the Engine also generates a file called the interaction log. Recording videos previewed, links traversed, and the time of each action, the log is a flat text history of the viewer’s interaction with the hypervideo.

Clearly Sawhney and Balcom envisioned an interactive authoring tool as a complementary application to the Engine runtime. Sawhney outlines a very robust tool that would aid in all phases of development: conceptualization, video production, editing and post-production, linking, and playback previews. While MediaLoom does not address all of these areas, it does offer an intermediary step to the tool described by Sawhney. It is, in fact, closer in functionality to the kind of tool described by Balcom. In designing HyperCafe Balcom used two applications: a "hyperscripting tool" (Storyspace) and a prototyping tool (Director). Two separate applications were required because "at present the hyperscript tool cannot play video, and the Prototyping tool by itself cannot adequately display connections between video clips or present an adequate visual overview of the entire system." Balcom envisioned a tool that combines these two stages with the final stage of "Hypervideo Markup" or script generation.

Storyspace

It is tempting to wonder whether a visual authoring tool is indeed valuable in the creation of a hypervideo artifact. After all, the most ubiquitous hypertext of all, the World Wide Web, is still largely created by hand-coding the markup in some kind of (often glorified) text-processor. WYSIWYG editors, it might be argued, do not in general offer the degree of control that hand-coding provides. The counter-argument to this line of reasoning and the single greatest influence on the interface design for MediaLoom can be found in Storyspace, the hypertext authoring environment developed by Jay Bolter, Michael Joyce, and John Smith. Though the dominant paradigm for hypertext has shifted to the page-hyperlink structure of the World Wide Web, Storyspace remains an excellent mechanism for creating and visualizing complex interlinked structures.

In Storyspace the author can create writing spaces which contain text, images, video and other writing spaces. Storyspace’s great contribution to interface design is the ease with which the author may view the relations between items. A single screen can indicate linking using directional lines extending from space to space as well as by showing shrunken spaces within the larger spaces, connoting depth. In addition, Storyspace supports a number of viewing options. Charts, lists, outlines, and maps offer the reader and writer multiple ways of conceptualizing the hypertext document. As the product documentation notes, "Storyspace is designed for the process of writing." (In the context of Storyspace the reader or "user" can also participate in this process.) The malleability in the composition phase inevitably produces a more dynamic artifact when it is finally presented to the reader. Storyspace documents can be delivered in a few different runtime configurations or they may be viewed in the original authoring system format.

As noted previously, Storyspace is primarily a tool for the creation of hyperlinked text. Other media elements are integrated only as a captioned illustration would be in a printed artifact. Non-textual media elements lie outside of the hyperlinking framework of Storyspace. They are independent entities that provide no opportunity for interaction. This drawback mostly precludes Storyspace from becoming a tool for complex hypermedia. Still, in projects such as HyperCafe, The Orbital Hypervideo, and others, Storyspace has proven that it works well as an interactive storyboarding tool for the preliminary stages of hypermedia development.

The Möbius Engine

The most recent hypermedia application with a genealogy partially traceable back to Storyspace is the Möbius Engine developed by Mikol Graves and Ryan Todd. This Engine, implemented in the Macintosh scripting application called SuperCard, is a full hypermedia authoring system. According to its developers, the Möbius Engine is an interactive filter situated on top of "unedited, unmediated source material". The active "participants" (both readers and authors) use the Möbius Engine to experience the media "indexically, topographically, and hypertextually."

The Möbius Engine, though perhaps less a progenitor of MediaLoom than Storyspace, is notable for its total merging of the authoring and the reading process. The authoring tool and the runtime environment are identical. Raw, unlinked media can be arranged (by an author) or browsed (by the reader) – though both activities are essentially the same. The importance of the Möbius Engine for MediaLoom is the incorporation of non-textual media elements in a self-consciously hypertextual environment. That is, the dominant system of organization is based on a node-link architecture rather than on cinematic (Director), typographic (SuperCard, WWW) or flowchart (Authorware) metaphors. As in Storyspace, the Möbius Engine easily provides visual overviews of the interlinked media elements.

The VisualSHOCK and V-Active Xtra and the T.A.G. Editor

Macromedia’s popular multimedia environment, Director, is at first glance an attractive tool for hypervideo. It is ubiquitous, cross-platform, stable, and extensible. Moreover, Director is based on a cinematic metaphor, arranging elements as frames, scores, casts, stages, and puppets. Two factors, though, contribute to Director’s fundamental inability to natively support hypervideo authoring. The first problem is the incessant linearity of the medium. Originally designed as an animation tool, Director’s score marches ever-onward from frame to frame. Non-linear, multi-linear, and cyclic behavior can be achieved in Director, but the author is constantly working against the forward motion of the playback head. More problematic for the hypervideo author is the lack of support for complex video interaction in Director. Quicktime video clips are not integral pieces of Director’s media structure in the way that image files, audio, and text are. Elements and actions within the video clip cannot be manipulated by Director, a fact which precludes spatial linking. This would be akin to Storyspace disallowing word-to-word linking and allowing only writing space-level linking. Also, the temporal flow of Director’s playback head often conflicts with the tempo of the digital video. Scripting video interaction in Director is at present a complex task. This difficulty is precisely what impelled Sawhney to build the standalone Hypervideo Engine.

There are, however, a few emerging technologies which seek to supplement Director’s video-handling capabilities. Though not a part of the creation of MediaLoom, these products acted as conceptual models for how to implement linking between video clips. Two of the products are Xtras, Macromedia’s name for third-party applications which "plug-in" to the architecture of Director itself. Both V-Active and the VisualSHOCK Xtra expose user-defined elements within the video clip frame to the control of Director’s scripting language, called Lingo. Thus, the granularity of interaction with the video is increased immensely. Similar to image maps on the World Wide Web, these enhanced videos could contain multiple tracking hotspots indexed to a region of the clip and the flow of time – an idea similar to the unimplemented spatio-temporal link in the Hypervideo Engine. [See figure 3.]

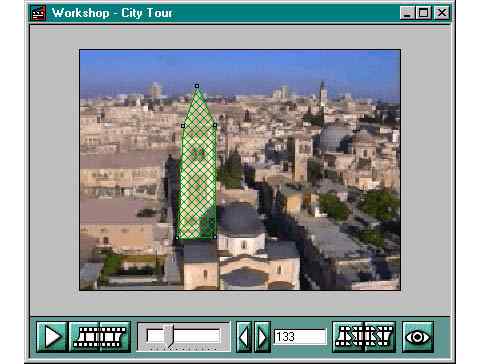

One final product, called T.A.G., does not interface with Director. Calling itself "The Future of Storytelling", T.A.G. is a temporal annotation generator, a tool for creating interlinked media mainly for distribution on the web. The T.A.G. editor generates movies to be viewed in the T.A.G. player or via RealNetworks streaming media players. Though the T.A.G. editor does not support the spatial linking mechanisms of the Xtras discussed above, it is a model for MediaLoom in that it exists as a standalone application independent of the code required for playback at runtime. T.A.G. is clearly geared towards online application development and so is subject to the low-quality video designed to occupy limited bandwidth. The hypervideo media form depends less on networked interactivity than it does on high-quality video and thus T.A.G. is useful as a model only.

Figure 3: The V-Active Spatial Link Tool

III. Prototype Development and Interface Design

The design of MediaLoom was constrained in a number of ways. Since functional interoperability with the Hypervideo Engine was an essential design concern, MediaLoom set out to offer visual means of creating the textual script files. In addition, MediaLoom had to be able to read old manually-generated script files for further editing in the graphical environment. Thus the interface and functionality had to be reverse-engineered from the existing Hypervideo Engine. Operations not supported by the runtime could not be built into the MediaLoom tool without being rendered inactive or grayed-out. Initially MediaLoom was meant to give access to every aspect of the Hypervideo Engine’s runtime features. In the end, time constraints and programming problems forced an essential subset of runtime functionality to be chosen and implemented. This subset includes:

The final two elements are actually features of the former Hypervideo Initializer which have been subsumed into the new authoring tool. The most notable functions supported by the runtime but missing from MediaLoom are listed below. It is hoped that future versions of MediaLoom will support this functionality.

MediaLoom expands on the Hypervideo Engine in ways not associated with the actual playback of video clips. For example, a visual log reader interprets the text files generated by a hypervideo viewing session. The log reader offers a flowchart of the viewer’s interaction in the hypervideo. Future versions of the Hypervideo Engine runtime will likely include the ability to run scripts stored on a remote server and to send interaction logs back to the server. Thus it seemed appropriate to enable remote downloading of interaction logs in the MediaLoom framework. In this configuration, MediaLoom can retrieve and catalog the various interaction logs uploaded to the website by the Engine. This could potentially enable gathering of real usability data since the logs clearly show the path taken by the reader/viewer. Though operational, this feature is not really feasible until a fully networked version of the Engine runtime is developed.
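The flowchart produced by the log reader amounts to a tally of clip-to-clip transitions. The Python sketch below shows one way such a tally could be built. The log format is entirely an assumption made for illustration (one tab-separated record per line: time, action, clip name, with the actions `play` and `preview`); the actual layout of the Engine's interaction log is not documented here, and `traversal_edges` is a hypothetical name.

```python
from collections import Counter


def traversal_edges(log_text: str) -> Counter:
    """Count clip-to-clip transitions in a hypothetical tab-separated log.

    Assumed record layout: "<time>\t<action>\t<clip>". Only "play" records
    contribute to the path; previews and any other actions are skipped.
    """
    edges = Counter()
    previous = None
    for line in log_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        _time, action, clip = line.split("\t")
        if action != "play":
            continue
        if previous is not None:
            edges[(previous, clip)] += 1
        previous = clip
    return edges
```

Each key of the result is a directed edge of the flowchart, and its count indicates how often the viewer followed that path during the session.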

Figure 4: the Hypervideo Engine compared to MediaLoom

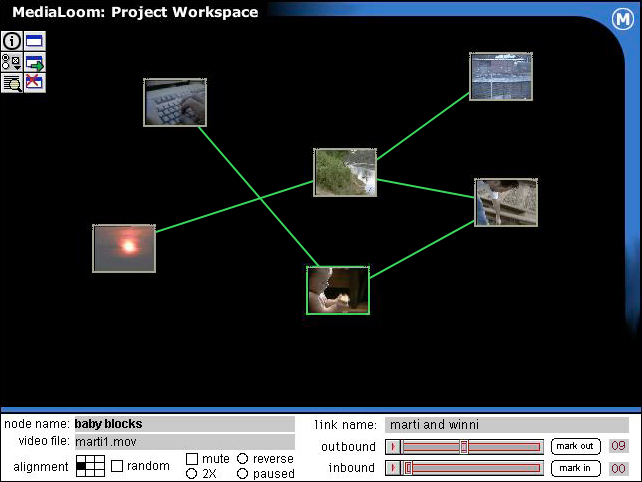

MediaLoom partially borrows the aesthetic of the Storyspace environment. Though MediaLoom does not currently allow the creation of structural depth in the way that Storyspace can nest writing spaces inside one another, the project workspace uses the node-link metaphor familiar to users of Storyspace. A small palette [see figure 5] on the left of the screen offers simple tools to the author.

Figure 5: MediaLoom tool palette

The project information, settings, and log reader screens are also accessible from the tool palette.

Video "nodes" resemble the simple rectangle of the writing space and can be created by clicking on a button similar in function to the Storyspace button for creating a new writing space. Adding a node immediately opens a dialog box asking for the video file to be contained in the node. A miniaturized version of the clip is then displayed in the project workspace. [See figure 6.] This clip can be given a name in the information strip at the bottom of the workspace. In addition, when highlighted, the clip can be played back on screen, albeit at diminished size. Nodes can be moved around on the screen and grouped thematically or in narrative sequences. Nodes can also be removed using the delete tool. At present Director supports a maximum of 24 video nodes on screen. This limitation will hopefully be addressed in a future version of MediaLoom which capitalizes on the extended video capabilities of Director 6.5.

Figure 6: MediaLoom Project Workspace

Linking is a multi-step process: 1) select the outbound (departure) node; 2) click the link button on the tool palette; 3) select the inbound (arrival) node; 4) using the video sliders, choose a mark out time for the departing link and a mark in time for the arrival node; 5) name the link, if desired. Departing links are active from the end of the previous link to the mark out time. Link times can be entered by hand if they are known beforehand.
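The rule that a departing link is active from the end of the previous link until its own mark out time can be stated as a short computation. The Python sketch below is an illustration, not MediaLoom's Lingo code; `link_windows` is a hypothetical name, and it assumes that the first link on a node becomes active at the start of the clip (time 0), which the rule above implies but does not state.

```python
def link_windows(mark_outs):
    """Compute the active window of each departing link on one node.

    mark_outs: list of (link_name, mark_out_seconds) pairs in any order.
    Returns a list of (link_name, active_from, active_until), ordered by time.
    Assumption: the first link is active from the start of the clip (0.0).
    """
    windows = []
    window_start = 0.0
    for name, mark_out in sorted(mark_outs, key=lambda item: item[1]):
        if mark_out <= window_start:
            # Two links with the same mark out time would leave one with no window.
            raise ValueError(f"link {name!r} has an empty active window")
        windows.append((name, window_start, mark_out))
        window_start = mark_out
    return windows
```

For example, mark out times of 3 and 7 seconds yield one link active from 0 to 3 seconds and a second active from 3 to 7 seconds.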

Each node contains a list of off-screen information which, when the project is saved, is used to generate the script necessary to run the hypervideo in the Hypervideo Engine. Extra information not utilized by the Engine (such as link names, project copyright info, etc.) is stored as commented text in the script file and is ignored by the runtime. When importing manually created script files, MediaLoom cleanly ignores (but does not delete) functionality that it does not support. MediaLoom is completely cross-platform between Windows 95/NT and the Macintosh PowerPC. However, since the runtime is Macintosh-only, scripts generated on a PC must be moved to a Mac for testing.
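The idea of carrying authoring-only information as comments that the runtime skips can be sketched as follows. This Python fragment is a simplified illustration and not MediaLoom's generator: `render_node` is a hypothetical function, the `--` comment marker is Lingo's and is only assumed to be what the script files use, and the output reproduces just the handler wrapper and the `tempoLinks` list shape seen in the hand-written example. A complete node would also need `playVideo` and related calls, whose arguments are not documented here.

```python
def render_node(name, links, metadata=None):
    """Render one node as script text.

    name:     handler name for the node, e.g. "MainVideoScript"
    links:    list of (time, target) pairs for the tempoLinks list
    metadata: optional dict of authoring-only notes, emitted as comments
    """
    lines = []
    for key, value in (metadata or {}).items():
        # Assumed comment syntax ("--", as in Lingo); ignored by the runtime.
        lines.append(f"-- {key}: {value}")
    lines.append(f"on {name}")
    pairs = ", ".join(f'{time:g}:"{target}"' for time, target in sorted(links))
    lines.append(f"tempoLinks [{pairs}]")
    lines.append(f"end {name}")
    return "\n".join(lines)
```

Keeping the extra information inside the script itself means a single file can be round-tripped between the authoring tool and the runtime without a separate project file.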

IV. MediaLoom Applied: The Short Cuts Hypervideo

The video critic Michael Nash believes that interactive video authors can learn much from traditional modes of storytelling. Specifically he refers to the director Robert Altman whose film work "follows the trails of coincidence, tangent, and narrative association … in ‘sequential parallel’" – a kind of linearized multi-linearity enforced by the medium of celluloid and sprockets. In particular Nash points to Altman’s 1993 film Short Cuts as "a model of how a technologically interactive narrative might work to take us beyond technofetishistic games with computers into a spiritual journey."

Short Cuts is a clear cinematic forerunner of hypervideo. Altman takes great care to interweave nine short stories by Raymond Carver so that the individual storylines all seem to be happening simultaneously. The camera merely swerves in and out of these narrative streams encountering characters who also are able to move from story to story. It is as though we are watching a computer monitor over the shoulder of Altman who is directing the flow of a hypervideo. Short Cuts lends itself quite well to hypervideation for two reasons: 1) the film anticipates many of the storytelling strategies intrinsic to the hypervideo form; 2) there are associations and tangents that are obscured by the linearity of the filmic medium which might be highlighted (or discovered new) in the medium of hypervideo.

The idea of using Short Cuts as a demonstration of hypervideo was suggested as a possible future use of the Engine in a paper by David Balcom called "Hypervideo: Notes Toward a Rhetoric". I am not, however, using the film clips as examples of the functionality of the engine. Rather I will use the Short Cuts hypervideo as an illustration of the simplified authoring experience afforded by MediaLoom’s interface.

A hypervideated Short Cuts seems a perfect illustration of Grahame Weinbren’s "Ocean of Streams of Story". Weinbren, taking a cue from Salman Rushdie’s Haroun and the Sea of Stories, asks "Can we image the Ocean as a source primarily for readers rather than writers? Could there be a ‘story space’ (to use Michael Joyce’s resonant expression) like the Ocean, in which the reader might take a dip, encountering stories and story-segments as he or she flipped and dived. . . . What a goal to create such an Ocean! And how suitable an ideal for an interactive fiction!" Though only certain elements of Altman’s three-hour film have been digitized and hypervideated, the Short Cuts hypervideo can be considered a small body of fluid narrative feeding into the larger Ocean of Streams of Story.

V. A Future for Hypervideo: Letterbox and a new Engine

MediaLoom is the first step in a larger project to develop a "feature-length" hypervideo work called Letterbox. This hypervideo is based on a screenplay written explicitly for the new medium by myself, David Balcom, and Phil Walker. Starting with nine words – regret, drift, building, memory, word, frame, screen, line, crash – Letterbox builds divergent and convergent storylines which touch asymptotically on three main characters. The Letterbox project team is currently completing the treatment of the plot and is beginning to script the characters' dialogue fully. Filming will likely begin in July of 1998. By then we hope to use an improved version of MediaLoom as the authoring tool for a series of Letterbox prototypes.

MediaLoom began as a way for the authors of Letterbox to cobble together narrative sequences for the Hypervideo Engine quickly and without having to script the nodes and links by hand. However, as Letterbox grew in complexity and in scope it has become clear that a much more robust implementation of the Hypervideo Engine and, consequently, of MediaLoom was required. Conceptually, Letterbox moves beyond the node-to-node linking offered by the current Engine. What follows is a description of three new modalities for hypervideo that a future version of the Hypervideo Engine should support.

The first new modality is called "pause-and-launch." In it a single video clip plays on the screen. If the viewer declines to interact with the visual field, the clips (each of which represents a single "shot" without internal cuts or edits) simply play out, replacing one another in the same visual field in exactly the same way as cinematic cuts play out on a screen. However, if the viewer should click the mouse pointer on the video, the clip immediately pauses on the current frame and "launches" the next clip in an area of the screen not occupied by the original clip. The pause-and-launch modality allows the viewer to do two things: 1) to initiate the "cuts" placed by the author in the video; 2) to spread the sequence of cuts out spatially rather than temporally. It may help to think of the cinematic cut in terms of hypertext. The cut can be considered a link that has been flattened out; the unflattened link (as in hypertext) may be ignored whereas the cut cannot. Cuts link shot to shot and the traversal of this link in film is not avoidable. What is unique about this kind of hypervideo is that clicking on a video clip creates a pictorial montage of shots and allows a kind of visual history of all the previous scenes leading to the currently playing clip. The pause-and-launch modality is inherently linear; clicking in the visual field merely preempts the playing clip and begins the next clip in the sequence, relocating it spatially.
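The behavior of pause-and-launch reduces to a small state machine: the sequence of shots never changes, and a click only decides whether the outgoing shot stays on screen. The Python sketch below is a hypothetical model of that logic (the class `PauseAndLaunch` and its method names are invented for illustration); screen placement and actual video playback are left out.

```python
class PauseAndLaunch:
    """Model of a linear shot sequence in which clicks leave paused shots on screen."""

    def __init__(self, shots):
        if not shots:
            raise ValueError("at least one shot is required")
        self.shots = list(shots)
        self.current = 0
        self.paused = []  # shots frozen on screen, oldest first (the visual history)

    def playing(self):
        return self.shots[self.current]

    def clip_finished(self):
        """No interaction: the next shot replaces the current one in place."""
        return self._advance()

    def click(self):
        """Viewer click: freeze the current shot and launch the next one elsewhere."""
        if self.current + 1 < len(self.shots):
            self.paused.append(self.playing())
        return self._advance()

    def _advance(self):
        # Returns False when the sequence has no further shot to play.
        if self.current + 1 < len(self.shots):
            self.current += 1
            return True
        return False
```

Whether the viewer clicks or waits, the next shot is always the same one; only the `paused` list, the pictorial montage left on screen, differs between the two paths.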

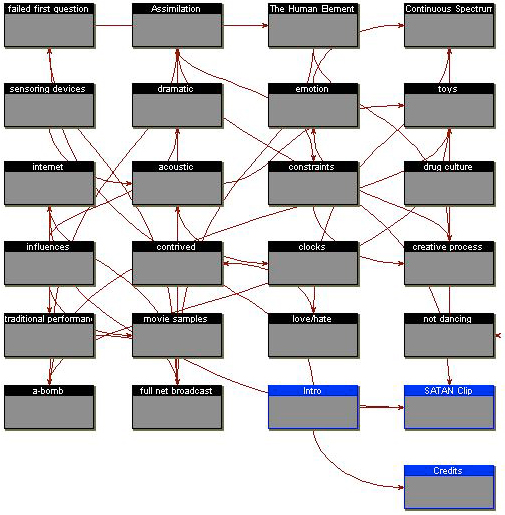

Another hypervideo modality, called "satellite stills", offers a central video clip that is orbited by a series of still images which are, in essence, temporal previews of links to related material: tangent story lines, flash-forwards, flash-backs, etc. The stills fade in and fade out, cycle and return, according to the temporal location on the playing video clip. Clicking on any one of these orbiting images slides it into centrality and dynamically generates a new set of satellite images. This modality reflects a more web-like structure than the pause-and-launch variety, though of course the two modalities can be used together.

The last new hypervideo modality is known to the Letterbox team as the "character slider." In this modality, there are distinct, parallel streams of video playing back in lockstep. Each stream follows the actions of a single character. The viewer can only access one stream at a time, but he or she can slide between the streams by clicking and dragging left and right in the visual field. New streams enter the visual field as slow dissolves rather than as cuts so that the viewer initiates smooth transitions between video streams, creating unique visual intersections unplanned by the author.
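The defining property of the character slider is that sliding changes which stream is visible but never the position in time, since all streams play back in lockstep. A minimal Python sketch of that invariant follows; the class `CharacterSlider`, its method names, and the choice to clamp at the first and last stream are assumptions made for illustration, and dissolves and video playback are omitted.

```python
class CharacterSlider:
    """Parallel per-character streams sharing one playhead."""

    def __init__(self, characters):
        if not characters:
            raise ValueError("at least one character stream is required")
        self.characters = list(characters)
        self.index = 0    # which stream is currently visible
        self.clock = 0.0  # a single playhead shared by every stream

    def tick(self, seconds):
        """Advance every stream together."""
        self.clock += seconds

    def slide(self, steps):
        """Drag right (positive) or left (negative); clamps at either end."""
        last = len(self.characters) - 1
        self.index = max(0, min(last, self.index + steps))

    def view(self):
        """Return the visible character and the shared playback time."""
        return self.characters[self.index], self.clock
```

Because the clock belongs to the slider rather than to any one stream, a viewer who slides to another character arrives at the same story moment from a different point of view.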

In addition to new conceptual modalities, there are technical improvements which should be a part of a new MediaLoom and Hypervideo Engine. To begin, the new Engine should be fully cross-platform. MediaLoom is a step in this direction, but without a runtime for the PC, authoring on a Windows machine is less than optimal. As discussed previously, the new Engine should be fully networked so that scripts (if not media) can be downloaded from a centralized script repository and seamlessly integrated into the Engine. This would enable a kind of serialized hypervideo where authors could update and add new scripts remotely. A central hypervideo script repository could also act as a repository for the interaction logs generated from local instances of the Engine. With the permission of the viewer these logs could be uploaded to the server for collation and comparison.

To remain a viable narrative medium the theory and technology of hypervideo must be improved. These suggestions for new interaction modalities and for new technological options – along with the development required by Letterbox – together lay the groundwork for further exploration of this nascent medium.

VI. From Author to Viewer: Notes on Hypervideo Narrative

What exactly did Michael Joyce mean when he claimed that "[hypertext] is the revenge of the word on television"? Joyce’s statement, I believe, was (and remains) a comment on the relation of interactivity to the concept of choice. Where television – and its kindred medium, video – offers to the viewer an endless choice of channels (a few hundred at last count) and versions (colorized, letterbox, director’s cut, etc.) it offers little in the way of active viewer engagement. Unlike hypertext, the televisual image does not "aspire to . . . its own reshaping," does not and cannot distinguish between viewer interaction and inaction. Where hypertext invites the user to initiate branching and redirection, television seems to exist as an unalterable flow of moving imagery. Moreover, hypertext exacts "revenge" on television by re-asserting the visual nature of the word, foregrounding the materiality of the (clickable!) word-as-thing rather than the word-as-vessel-of-thought.

Joyce’s declaration is a rich point of departure because it suggests a tension, though not an incompatibility, between hypertextuality and televisuality – two of the explicit characteristics of the hypervideo media form. The goal of this section is to begin a study of the narrative mechanism in hypervideo by comparing theories of subjectivity developed in response to the various artistic genres from which it has evolved, specifically film, television, and hypertext. This section serves, in essence, as a genealogy of hypervideo.

Multiplicity and simultaneity are key elements in hypervideo. Thus, the single presentation of a full-screen image and long, continuous streams of video are extremely rare. Though some radical modalities exist, the underlying structure of hypervideo emulates the link-node arrangement of hypertext, substituting (sometimes in an ungainly way) the discrete video clip for the discrete lexia. Hypervideo is a true computer-based media form, not only technologically but aesthetically. Rather than aspire to a quality of cinematic (or VR-like) immersion, hypervideo presents a field of video clips to be looked at and treated in relation to one another, not unlike the elements of the interface of the computer itself. These hypermediated, self-consciously digitalized video clips operate as a montage in the spatial, pictorial sense rather than in the temporal, Eisensteinian sense.

How then does hypervideo differ from "interactive cinema"? Perhaps the foremost practitioner of interactive cinema is the artist Grahame Weinbren. Like many of the first video artists to offer user interactivity by way of digital control of analog media, Weinbren is skeptical of the computer as a tool and as a presentation space. He clearly does not view the computer as a new artistic medium. "Digital media," he writes,

offer limited motion, inferior little images in a fraction of the monitor screen. Images are already inferior on monitors compared with light projected through transparent celluloid. Not even fast enough to give a real ‘persistence of vision,’ the irony of the product name ‘Quick Time’ is lost in a babble of hype, the chatter of metaphors for leisure and freedom and non-accountability that pepper the world of computer technology. Quick Time movies are slow and inferior. It is hard to imagine them carrying the power of the cinematic in their stopwatch-sized rectangles.

Digital video clips, in this formulation, are triply inferior to the filmic image: not only do they lack the radiance of projected light but they are too small and too slow. Weinbren’s notion of interactive cinema is thus explicitly tied to the dominant visual aesthetic, if not the technologies, of the cinema. In contrast, hypervideo as a media form is predicated on digital video viewed on a computer monitor. The small size of the video clip creates options (like spatial contiguity and overlapping) rather than foreclosing them. Similarly, the sometimes jerky motion and strange compression artifacts endemic to digital video of this kind are treated as aesthetic properties rather than as deficiencies of form. According to Weinbren, the "power of the cinematic" can be technologically located. The size, speed, and brightness of the cinematic image are at least as important as the narrative elements, visual composition, and editing of the filmed artifact. The power of hypervideo has a similar technological component. Hypervideo, however, derives its power not from a singular, immersive visual field but from the relationships created between disjunctive visual fields as initiated by the viewer’s interaction with these fields.

How is the viewer’s subjective relationship to the hypervideo produced and sustained? To answer this we must first remind ourselves of the mechanisms of subject construction at work in the three media forms most directly related to hypervideo: film, television/video, and hypertext. Classical film editing constantly works to mend the gaps in the viewer’s subjectivity created by the changing camera position and the sequence of edits. For example, a single shot – say, a medium shot framing two people conversing – may elicit in the viewer a response which jolts him or her into the recognition of the visual field as a constructed artifact rather than as a transparent index of reality. Since the viewer cannot account for the fact that he or she is witness to the scene as an unseen third party, a gap in the viewer’s relationship to the film opens up. There is the suggestion of another, unrepresented presence, an "absent one" who usually occupies the space of the camera (what Jean-Pierre Oudart calls "the fourth side"). Traditional cinematic editing attempts at all costs to repair this fissure, to suture the wound inflicted by the gaps and disjunction in the signifying chain. This repair (albeit a temporary one) is usually effected by a shot/reverse shot sequence or an establishing shot/close-up sequence, though this system of suture can work in a surprising variety of ways. The goal is to obfuscate the cuts by embedding them in subjectifying shots. A successful suture enforces a passivity in the viewer by not disrupting one’s subjective relationship to the film. Transparency of form is the telos of classic cinematic editing.

The cut is both a cause of the rift in the viewer’s subjectivity and the agent of its abridgement. The formed subject can be found situated between these two alternating impulses. The transparency of the cut is the motive force in the system of suture. Yet, in hypervideo the cut is never transparent. It is, in fact, foregrounded and manifest physically in many of the hypervideo modalities discussed above. It may be argued that a well-constructed hypervideo offers link opportunities that emulate the classical system of suture. For example, clicking on a particular clip might trigger another clip that complements the former in a way that comfortably places the subject in relation to the two scenes. This kind of classical segue does not feel natural in hypervideo, partially because it quickly becomes evident that the filmic system of suture depends heavily on the immersive nature of the cinematic apparatus. Conversely, the hypervideated subject is constantly made aware of the opacity of the interface. Even in the pause-and-launch modality hypervideo cannot effect cinematic suture since cuts, traditional though they may be, are splayed out temporally and spatially. In the montage of still images of what-has-come-before the hypervideo does not let the subject forget its previous positions. Indeed, the inability to assimilate the entire visual field in hypervideo prevents the link-as-cut from ever effacing itself as would be necessary in a hypervideo system of suture.

So, if the transparent cut does not belong to the formal characteristics of hypervideo, how then is subjectivity constructed in this new medium? What perspective, what angle, which character offers hypervideo viewers a vantage from which to integrate themselves into the narrative? Because the environment in which hypervideo is presented differs considerably from the cinematographic apparatus ("the darkness of the theater, the relative passivity of the situation, the forced immobility of the cine-subject"), filmic subjectivity is not an entirely adequate template for understanding the new medium. The television set, which in form and in its technology of projection resembles the computer monitor of hypervideo, does, however, offer a starting point for discussion. TV’s paradigm of vision has been called the "regime of the glance" in opposition to the entrenched gaze theories of psychoanalytic film criticism. This theory of the glance posits a less active looker than the cinema spectator. Since television is always psychologically "on" even when the actual box is not, there is little sense of it being meant for the viewer in the way that the cinema seems to consent to the entry of the spectator into the visual transaction. The absent-one of the cinema is replaced by an intimate "co-presence" of the televisual image and viewer. John Ellis considers this co-presence partly an effect of the displacement of cinematic scopophilia by a related "invocatory drive," listening, the aural equivalent of voyeurism. The omni-directionality of sound, constantly entreating the subject to look towards the screen, works to stitch the fragmented glances into a unified whole.

Hypervideo, of course, is not television. It is not always on, and through its various invitations to interactivity it perhaps solicits the attention of the viewer (situated as a user at the computer terminal) with more success than the total flow of television. Nevertheless, the televisual subject is partially present in its hypervideated cousin. The voyeuristic urge does exist in hypervideo, but it is dispersed and not always scopic. Paradoxically, one simply cannot assimilate the whole visual field of the small hypervideo screen in the way that the cinematic screen can be taken in (or, rather, in the way that the system of suture can take in the viewer). The hypervideated subject is formed by the alternation between this schizophrenic attempt at spatial assimilation and the interactive intimacy of the video image on screen. Just when the video clips seem to fragment beyond the ability of the viewer to establish a stable vantage, the interface solicits interactivity, placing the subject back into a position of intimacy. This process is an analogue of the way that the televisual subject is constructed by the vacillation between a schizophrenic attempt at assimilating the temporal total flow of TV and the frequently interjected direct addresses to the viewer (by commercials, documentaries, news anchors, and even fictive programs). Like the cinematic subject positioned between the awareness of an "absent-one" and an identification with a stable filmic subject, the hypervideated subject exists in suspension between cognitive overload and intimate control.

The third and final genre from which hypervideo derives is hypertext fiction. Hypertextual structure is the ghost in the machine, if you will, for hypervideo. It is always present in the underlying conception of the authors who use a rudimentary link-node authoring environment to build the hypervideo, though more often than not text does not play a part in the actual presentation. Subjectivity in hypertext – still a largely unexplored field of inquiry – offers perhaps the most robust model for hypervideated subjectivity. Here we return to suture, not at the level of the signifier (as in the cinematic model) but at one level of abstraction higher, in the plane of diachronic discourse. The plight of the hypertextual subject is not unlike that of the hypervideated subject: both must negotiate a fragmented collection of materials that seems to resist closure and linear meaning-making. Terry Harpold argues that in hypertext it is a "narrative suture [that] shores up the gaps in hypertextual discourse." The mere idea that behind the dismembered text lies a recoverable narrative (a kind of textual embodiment of the subject-supposed-to-know) is enough to bandage the gaps and disjunction, to legitimize "the link’s function as a marker of connection and integration, rather than one of division and fragmentation." As Harpold notes, the narrative may not exist ("if you take apart a knot, there is nothing in it"), but the idea that it might is enough to bind together the subjective gap. This, I think, is the most compelling argument for a system of suture at work in hypervideo. Absence is not manifest in hypervideo by the intra-frame cut or edit but rather by the visually dismembered structure of the video clips themselves. The hypervideated subject is reconstituted at the level of narrative whereas the cinematic subject derives mostly from cinematographic conventions and technical organization of filmic space.

For now, we may adopt Harpold’s formulation of hypertextual suture for the hypervideo media form – with one emendation. Hypertext and hypervideo, though sharing structural features, differ fundamentally in their relation to time. As in film, hypervideo’s movement in time is inexorable and unalterable. Though video clips often loop and a sense of forward progress is often absent, the clips themselves play through without stopping. In pause-and-launch the stopped clip is merely a visual reminder of where the new playing clip originated. The moving image is always moving in hypervideo – the subject can only redirect the flow. In hypertext, which after all is little more than a collection of static text nodes, blockage occurs regularly. It is at these moments of obstruction that the effects of narrative suture rescue the subject from despair or abandonment. The incessant flow of hypervideo precludes blockage in the strict sense: there is never nowhere to go since the video flow is always going somewhere even if that somewhere is back to the origination of the clip. This necessarily linear movement in time functions like the story line itself, threading a course on top of the disjunct video clips. I propose thinking of subjectivity in hypervideo as a dissolving suture, a temporary articulation of the lacerated video corpus that is constantly being assimilated into the corpus itself. Because of the disjunctive structure of the hypervideo the subject never has time to establish a firm perspectival footing (as in cinematic suture), but at the same time the inexorable inertia of the moving image bandages the gap created by disjunction. The suture in hypervideo – a medium in perpetual temporal flux – exists in a permanent state of dissolution.

Acknowledgements

For their help and inspiration at various stages of this project I would like to extend special thanks to David Balcom, Jay Bolter, Matthew Causey, Ed Curry, Mikol Graves, Terry Harpold, Michael Joyce, Mike Koetter, Nick Sawhney, Ian Smith, Ryan Todd, Phil Walker and especially to my wife Robyn.

References

Balcom, David. Hypervideo: Notes Toward a Rhetoric. M.S. Thesis, 1996.

http://www.lcc.gatech.edu/gallery/hypercafe/David_Project96/contents.html

Baudry, Jean-Louis. "The Apparatus: Metapsychological Approaches to the Impression of Reality in Cinema." Film Theory and Criticism, 4th edition. Gerald Mast, et al. Oxford: Oxford University Press, 1992.

Cooper, Alan. About Face: The Essentials of User Interface Design. Chicago: IDG Books, 1995.

Ellis, John. "Broadcast TV as Sound and Image" (from Visible Fictions). Film Theory and Criticism, 4th edition. Gerald Mast, et al. Oxford: Oxford University Press, 1992.

Gaggi, Silvio. From Text to Hypertext: Decentering the Subject in Fiction, Film, the Visual Arts, and Electronic Media. Philadelphia: University of Pennsylvania Press, 1997.

Graves, Mikol and Ryan Todd. Navigating the Möbius Strip: Toward Creating the Replenishable Text. M.S. Project Paper, 1997.

http://www.mindspring.com/~rtodd/mobius/mobpaper.html

Harpold, Terry. "Threnody: Psychoanalytic Digressions on the Subject of Hypertexts." Hypermedia and Literary Studies. Paul Delany and George Landow, eds. Cambridge, Mass.: The MIT Press, 1991.

Jameson, Fredric. Postmodernism or, The Cultural Logic of Late Capitalism. Durham, N.C.: Duke University Press, 1991.

Joyce, Michael. Of Two Minds: Hypertext Pedagogy and Poetics. Ann Arbor: University of Michigan Press, 1995.

Landow, George P. Hypertext 2.0: The Convergence of Contemporary Critical Theory and Technology. Baltimore: The Johns Hopkins University Press, 1997.

Liestøl, Gunnar. "Aesthetic and Rhetorical Aspects of Linking Video in Hypermedia." Proceedings of the European Conference on Hypermedia Technology. (1994) 217-223.

Miller, Jacques-Alain. "Suture (elements of the logic of the signifier)." Screen. 17:3 (1976) 24-34.

Nash, Michael. "Vision After Television: Technocultural Convergence, Hypermedia, and the New Media Arts Field." Resolutions: Contemporary Video Practices. Michael Renov and Erika Suderburg, editors. Minneapolis: University of Minnesota Press, 1996.

Oudart, Jean-Pierre. "Cinema and Suture." Screen. 17:3 (1976) 35-47.

Sawhney, Nitin. Authoring and Navigating Video in Space and Time: A Framework and Approach towards Hypervideo. M.S. Project Paper, 1996. http://www.lcc.gatech.edu/gallery/hypercafe/Nick_Project96/hypervideo.html

Silverman, Kaja. The Subject of Semiotics. Oxford: Oxford University Press, 1983.

Tognazzini, Bruce. Tog on Interface. New York: Addison Wesley, 1992.

Weinbren, Grahame. "The Digital Revolution is a Revolution of Random Access." Telepolis.

http://www.heise.de/bin/tp-issue/tp.htm?artikelnr=6113&mode=html.

--------. "In the Ocean of Streams of Story." Millennium Film Journal. 28 (Spring 1995):

http://www.sva.edu/MFJ/journalPages/MFJ28/GWOCEAN.HTML
