Supercharged culture-geeking

This past Monday, IBM and the Office of Digital Humanities of the NEH convened a bunch of smart folks to talk about what humanities scholars would do with access to a supercomputer, real or distributed. I had been looking forward to this discussion for months (and, in the abstract, for years). It was a wonderful convergence of two of my life interests.

We had a broad representation of disciplines — a librarian, a historian, a few English profs, an Afro-American studies professor, some freakishly accomplished computer scientists, and a bunch of “general unclassifiable” folks who perfectly straddle the worlds of technology and culture.

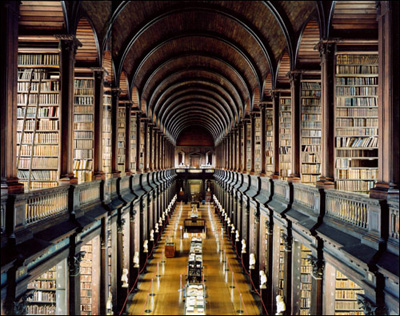

The Library of Trinity College, Dublin

The grid has about a million devices on it and packs some serious processing power, but to date the only projects that have run on it have been in the life sciences. We were trying to think beyond that yesterday.

My job was to pose some questions to help frame problems, mostly because researchers outside the sciences just don’t think in terms of questions that require high-performance computing. That doesn’t mean those questions don’t exist. It’s funny how our tools limit the way we even conceptualize problems.

On the other hand, you might argue that this is a hammer in search of a nail. OK, fine. But have you seen this hammer?

Here’s some of what I asked:

- Are there long-standing problems or disputes in the humanities that are unresolved because of an inability to adequately analyze (rather than interpret)?

- Where are the massive data sets in the humanities? Are they digital?

- Can we think about arts and culture more broadly than usual: across millennia, languages, or disciplines?

- Is large-scale simulation valuable to humanistic disciplines?

- What disciplinary intersections have gone unexplored for lack of a common starting point?

- Where is pattern discovery most valuable?

- How do we formulate large problems with non-textual media?

I also offered some pie-in-the-sky ideas to jumpstart discussion, all completely personal fantasy projects. What if we …

- Perform an analysis of the entire English literary canon looking for rivers of influence and pools of plagiarism. (Literary forensics on steroids.)

- Map global linguistic “mutation” and migration to our knowledge of genetic variation and dispersal. (That’s right, get all language geek on the Genographic project!)

- Analyze all French paintings ever made for commonalities of approach, color, subject, object sizes.

- Map all the paintings in a given collection (or country) to their real-world inspirations (Giverny, etc.) and provide ways to slice that up over time.

- Analyze satellite imagery of the jungles of Southeast Asia to try to discover ancient structures covered by overgrowth.

- Determine the exact order of Plato’s dialogues by analyzing all the translations and “originals” for patterns of language use.

(Due credit for the last four of these goes to Don Turnbull, a moonlighting humanist and fully accredited nerd.)

Discussion ranged widely but settled on two major topics, both having to do with the relative unavailability of ready-to-process data in the humanities (compared to that in the sciences). Some noted that their own data sets were, at most, a few dozen gigabytes. Not exactly something you need a supercomputer for. The question I posed (where is the data?) was always in service of another goal: doing something with it.

But we soon realized that we were getting ahead of ourselves. Perhaps the very problem that massive processing power could solve was getting the data into a usable form in the first place.

The Great Library of the Jedi, Coruscant

At present it seems to me (I don’t speak for IBM here) that the biggest single problem we can solve with the grid in the humanities isn’t discipline-specific (yet). It lies in taking digital-but-unstructured data and making it useful. OCR is one way; musical notation recognition and semantic tagging of visual art are others. Basically, any form of undescribed data that can be given structure through analysis is promising. If the scope were large enough, this would be a stunning contribution to scholars and ultimately to humanity.

The possibilities make me giddy. Supercomputer-grade OCR married to 400,000 volunteer humans (the owners/users of the million devices hooked to the grid) who might be enlisted to correct OCR errors, reCAPTCHA-style. Wetware meets hardware, falls in love, discusses poetry.
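To make the reCAPTCHA-style idea a little more concrete, here is a minimal sketch of how volunteer corrections might be combined. It assumes, as the original reCAPTCHA did, that each volunteer sees a word the OCR could not read paired with a control word whose answer is already known; only volunteers who get the control word right are trusted, and a reading is accepted once enough trusted volunteers agree. The function name, thresholds, and data shapes are all hypothetical illustrations, not anything IBM or the grid actually provides.

```python
from collections import Counter
from typing import Optional


def consensus_transcription(
    responses: list[tuple[str, str]],
    control_word: str,
    min_votes: int = 3,
    min_agreement: float = 2 / 3,
) -> Optional[str]:
    """Return the agreed reading of one unreadable OCR word, or None.

    responses: one (control_answer, unknown_answer) pair per volunteer.
    control_word: the known word shown alongside the unknown one (hypothetical setup).
    """
    # Keep only answers from volunteers who typed the control word correctly.
    trusted = [
        unknown.strip()
        for control, unknown in responses
        if control.strip() == control_word and unknown.strip()
    ]
    if len(trusted) < min_votes:
        return None  # too few trusted readings so far; keep showing the word
    reading, count = Counter(trusted).most_common(1)[0]
    if count / len(trusted) >= min_agreement:
        return reading
    return None  # trusted volunteers disagree; route the word to more people


responses = [("the", "whale"), ("the", "whale"), ("teh", "wha1e"), ("the", "wha1e")]
print(consensus_transcription(responses, control_word="the"))
```

Here the third volunteer mistypes the control word, so their answer is ignored; two of the remaining three agree on "whale", which clears the two-thirds threshold. Requiring several independent votes, rather than trusting any single correction, is what would let a crowd of strangers improve the text without a scholar checking every word.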

The other topic generating much discussion was grid-as-a-service. That is, using the grid not for a single project but for a bunch of smaller, humanities-related projects, divorcing the code that runs a project from the content that a scholar could load into it. You’d still need some sort of vetting process for the data that got loaded onto people’s machines, but individual scholars would not have to worry about whether their project was supercomputer-caliber or what program they would need to run. In a word, a service.

Who knows if either of these will happen. It’s time now to noodle on things. As always, if you have ideas for how you might use a humanitarian grid to solve a problem in arts or culture, drop me a line. We’re open to anything at this point.

A few months ago Wired proclaimed “The End of Theory,” arguing that more and more science is no longer being done in the classical hypothesize-model-test mode. Wired attributes this to our access to data sets so large, and tools for recognizing patterns so powerful, that there’s no need to form models beforehand.

This has not happened in arts and culture (and you can argue that Wired overstated the magnitude of the shift even in the sciences). But I have to believe that access to high-performance computing will change the way insight is derived in the study of the humanities.